Unveiling Insights through Topic Modeling: Analyzing Event Ticketing Reviews in South Africa

Introduction

In our previous blog posts, we examined sentiment and emotion analysis of event ticketing reviews in South Africa. Now we turn to the topics discussed in each review. By automating topic extraction, we aim to surface the key themes and subjects in customer reviews. In this post, we explore three popular topic modeling methods (LDA, Top2Vec, and BERTopic) and apply them to event ticketing reviews.

Understanding Topic Modeling

Topic modeling is an unsupervised technique that automatically identifies topics within a collection of text documents. It lets us discover latent themes without labeling any reviews by hand. Applied to event ticketing reviews, it can surface the most frequently discussed topics and give us actionable insights for improving our services.

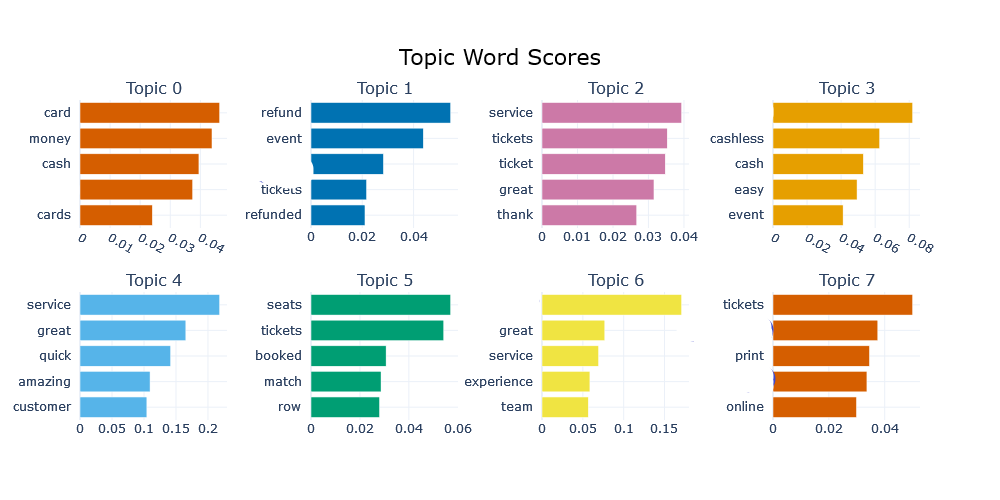

BERTopic

BERTopic uses transformer-based language models, such as BERT, to identify topics in a document collection. It embeds each document and then, by default, applies a hierarchical, density-based clustering step that groups similar documents into coherent topics. Because it builds on pre-trained language models, BERTopic can capture context that word counts alone miss, which often leads to more coherent topic representations. This gives us a granular view of the diverse topics discussed in event ticketing reviews.
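As a rough sketch of what fitting BERTopic can look like in practice, the snippet below assumes the `bertopic` package is installed and that the reviews are available as a list of strings. The function names `fit_bertopic` and `topic_sizes`, and the `min_topic_size` value, are illustrative assumptions and not the exact setup used for this post.

```python
from collections import Counter


def fit_bertopic(reviews, min_topic_size=10):
    """Fit BERTopic on a list of review strings (illustrative settings)."""
    # Imported lazily so the helper below works without the package installed.
    from bertopic import BERTopic

    model = BERTopic(language="english", min_topic_size=min_topic_size)
    topics, _probs = model.fit_transform(reviews)  # one topic id per review
    return model, topics


def topic_sizes(topics):
    """Count reviews per topic id; BERTopic uses -1 for outlier documents."""
    counts = Counter(topics)
    outliers = counts.pop(-1, 0)
    return dict(sorted(counts.items())), outliers


# Hand-written topic ids standing in for real model output.
sizes, outliers = topic_sizes([0, 1, 1, -1, 0, 1])
print(sizes, outliers)
```

After fitting, `model.get_topic_info()` lists each topic with its size and top keywords, which is a convenient starting point for naming topics by hand.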

After applying BERTopic to the reviews, we discovered 8 distinct topics. To maintain confidentiality, we have omitted the names of the event ticketing companies from the results. Topic 1 revolves around refunds, with customers frequently raising queries or frustrations about them. Topics 2, 4, and 6 primarily relate to service. Topics 0 and 3, by contrast, focus on financial aspects such as payment methods and ticket purchase transactions.

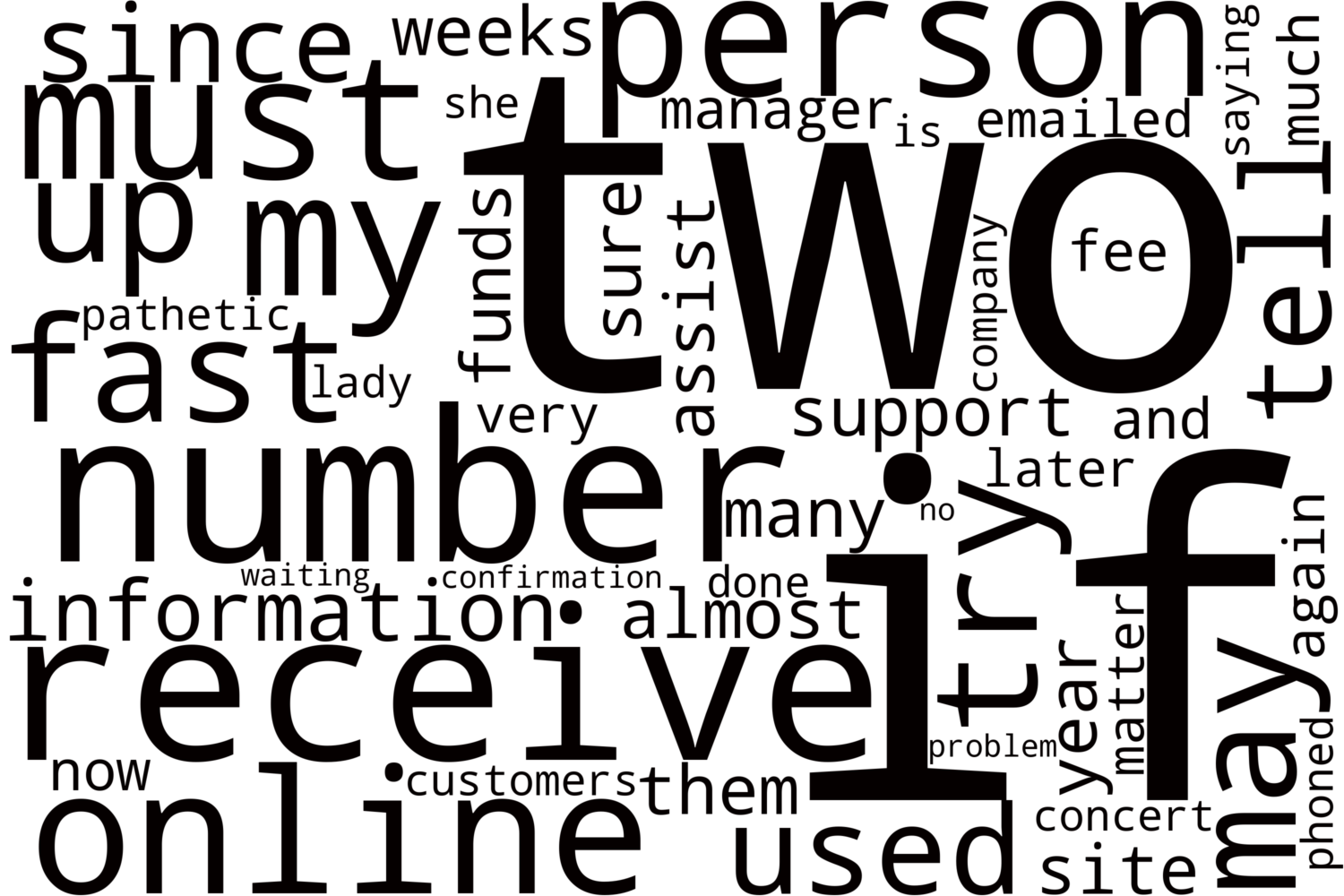
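Once topics have been read and named by hand, they can be rolled up into broader themes. The sketch below encodes the grouping described above; the dictionary name `TOPIC_THEMES`, the helper `theme_counts`, and the sample topic ids are hypothetical and only show the idea.

```python
from collections import Counter

# Theme labels follow the manual reading described in this post.
# Any topic id not described there falls back to "unlabelled".
TOPIC_THEMES = {
    0: "financial",
    1: "refunds",
    2: "service",
    3: "financial",
    4: "service",
    6: "service",
}


def theme_counts(topic_ids):
    """Roll per-review topic ids up into broader themes."""
    return Counter(TOPIC_THEMES.get(t, "unlabelled") for t in topic_ids)


# Made-up topic ids for six reviews, purely to show the call.
example = theme_counts([1, 1, 2, 0, 5, 4])
print(example.most_common())
```

Keeping the mapping in one place makes it easy to revise the labels if a topic turns out to mean something different on closer reading.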

Top2Vec

Top2Vec is a relatively new approach that combines document clustering with topic modeling. It represents each document as a vector in a high-dimensional space, where similar documents cluster together, and it discovers topics from the dense groups of documents in that space. Because the vectors carry semantic and contextual information, Top2Vec can capture more nuanced and diverse topics in event ticketing reviews.

Top2Vec returned only a single topic and, unlike the BERTopic results, that topic had no clear interpretation. This illustrates a limitation of topic modeling: it can occasionally generate topics that are hard to interpret.
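For reference, a minimal Top2Vec run might look like the sketch below. It assumes the `top2vec` package is installed and that the reviews are a list of strings; the function names and parameter values are illustrative and not the configuration used for this post.

```python
def fit_top2vec(reviews, min_count=50):
    """Fit Top2Vec on a list of review strings (illustrative settings)."""
    # Imported lazily so the helper below works without the package installed.
    from top2vec import Top2Vec

    # min_count drops words that appear fewer than this many times overall.
    model = Top2Vec(reviews, min_count=min_count, speed="learn", workers=4)
    topic_words, _scores, topic_nums = model.get_topics(model.get_num_topics())
    return model, topic_words, topic_nums


def describe_topics(topic_words, topic_nums, top_n=5):
    """Format each topic as 'Topic <id>: word, word, ...' for manual review."""
    lines = []
    for num, words in zip(topic_nums, topic_words):
        top = ", ".join(str(w) for w in list(words)[:top_n])
        lines.append(f"Topic {num}: {top}")
    return lines


# Made-up words standing in for real model output.
summary = describe_topics([["refund", "ticket", "money", "email"]], [0], top_n=3)
print(summary)
```

Printing the top words per topic in this way is usually the quickest check of whether a topic can be interpreted at all.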

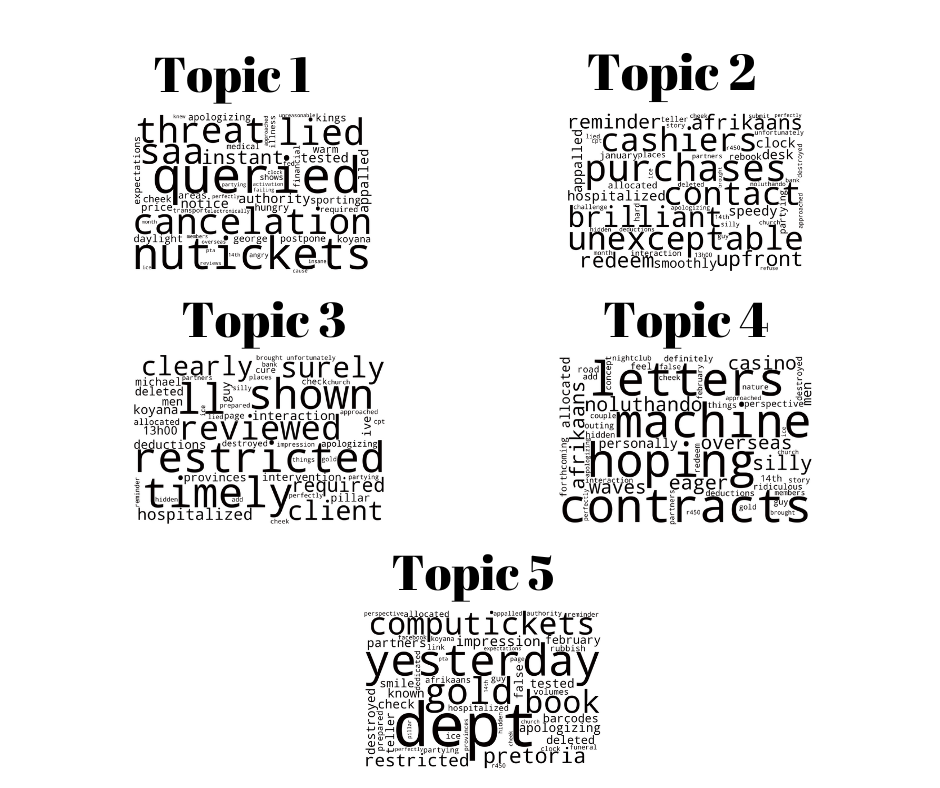

Latent Dirichlet Allocation (LDA)

LDA is one of the most widely used topic modeling algorithms. It assumes that each document is a mixture of topics, that each topic is a distribution over words, and that each word in a document is attributed to one of the document's topics. LDA infers these latent topics from word frequencies and co-occurrences across the corpus. Through this method, we can identify the most prevalent topics and their word distributions within the event ticketing reviews.

Unlike the other two models, LDA requires you to set the number of topics in advance. We chose five. As with Top2Vec, however, the LDA results were not easily interpretable.
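The sketch below shows one way to fit a five-topic LDA model. This post does not depend on a particular LDA implementation, so scikit-learn is an assumption here, as are the function names, the English stop-word list, and the random seed.

```python
def fit_lda(reviews, n_topics=5, seed=0):
    """Fit LDA on bag-of-words counts (scikit-learn assumed, not confirmed)."""
    # Imported lazily so the helper below works without scikit-learn installed.
    from sklearn.decomposition import LatentDirichletAllocation
    from sklearn.feature_extraction.text import CountVectorizer

    vectorizer = CountVectorizer(stop_words="english")
    counts = vectorizer.fit_transform(reviews)
    lda = LatentDirichletAllocation(n_components=n_topics, random_state=seed)
    doc_topics = lda.fit_transform(counts)  # rows are per-review topic mixtures
    vocab = vectorizer.get_feature_names_out()
    return lda.components_, vocab, doc_topics


def top_words(topic_word_weights, vocab, n=5):
    """Return the n highest-weighted words for each topic row."""
    result = []
    for weights in topic_word_weights:
        ranked = sorted(range(len(vocab)), key=lambda i: weights[i], reverse=True)
        result.append([str(vocab[i]) for i in ranked[:n]])
    return result


# Toy weights (not real model output) to show the helper on its own.
toy_vocab = ["refund", "queue", "payment", "venue"]
toy_weights = [[0.1, 0.2, 3.0, 0.4], [2.5, 0.3, 0.2, 1.1]]
toy_top = top_words(toy_weights, toy_vocab, n=2)
print(toy_top)
```

Because the number of topics is fixed up front, it is worth rerunning with a few different values of `n_topics` and comparing the top words before settling on one.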

Final Thoughts

In this post, we explored three popular topic modeling methods (LDA, Top2Vec, and BERTopic) and applied them to event ticketing reviews in South Africa. BERTopic produced the most interpretable themes, covering refunds, service, and payments, while the Top2Vec and LDA results were harder to interpret. Overall, topic modeling proved to be a valuable technique for uncovering the themes and subjects discussed in customer reviews.